How OpenAI can defend itself against LLM competition

Developer whiplash is bad for companies selling access to language models. So how should a company like OpenAI defend itself against this?

It’s common to hear the word “commoditization” when discussing large language models.

Language models have become plug-and-play and easily interchangeable. When a new model boasts better performance, swathes of developers rush over to extract the extra margin. And when their original model provider ships an update to recapture the lead, they rush back.

This is particularly relevant this week, which featured launches of Gemini 1.5 Pro and an improved GPT-4 Turbo, plus news from Llama and Mistral. And that’s after Claude 3 went viral for state-of-the-art performance less than one month ago.

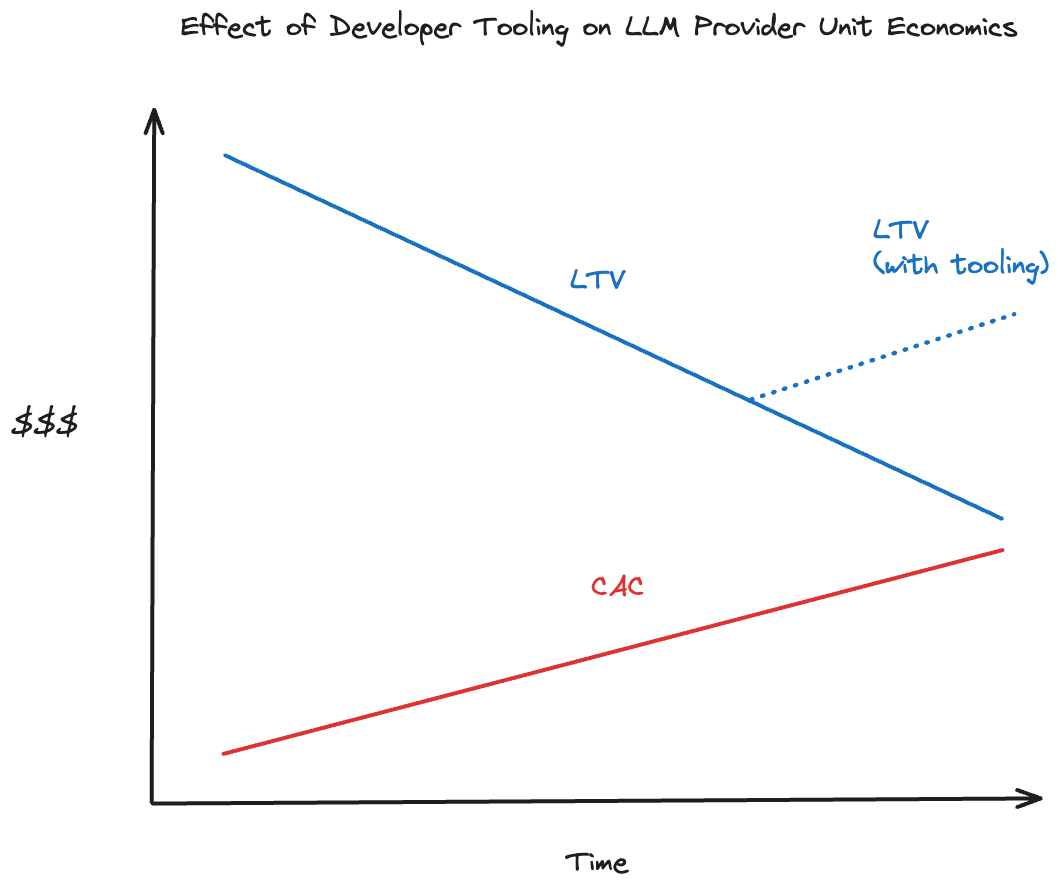

This kind of developer whiplash is obviously bad for companies selling access to language models. Spending money to train models and acquire new users (e.g. through free credits) fills a leaky bucket if developers can bolt at any time for a suddenly more attractive competitor.

So how can an LLM provider like OpenAI defend itself against this?

The long-term success of OpenAI’s API business may come not from the quality of its models but from its developer tooling.

Developers using LLMs in their products face unique challenges. Language outputs are hard to monitor for quality assurance. How do you, as a developer, know when your product is hallucinating or returning unhelpful results in production for certain user queries? Offline analytics are necessary for measuring how the model performs in production.

It’s also hard to evaluate product changes. Even small prompt changes can lead to drastically different outputs. Being able to experiment prior to launch is crucial for ensuring a smooth product experience. Experimentation requires a toolset for evaluating and monitoring LLM outputs.
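To make the idea of pre-launch experimentation concrete, here is a minimal sketch of back testing two prompt templates against a fixed set of queries. Everything in it is an illustrative assumption rather than any provider's actual API: `fake_model` stands in for a real LLM call so the example runs offline, and `backtest`, the templates, and the length-based check are hypothetical.

```python
from typing import Callable, Dict, List


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; terse only when told to be brief."""
    question = prompt.split("Question: ", 1)[1]
    if "brief" in prompt.lower():
        return f"Short answer to {question}"
    return f"Here is a long, detailed answer to {question} " + "with extra detail " * 10


def backtest(
    templates: Dict[str, str],
    queries: List[str],
    model_fn: Callable[[str], str],
    passes: Callable[[str], bool],
) -> Dict[str, float]:
    """Return, per template, the fraction of queries whose output passes the check."""
    results: Dict[str, float] = {}
    for name, template in templates.items():
        outputs = [model_fn(template.format(question=q)) for q in queries]
        results[name] = sum(passes(o) for o in outputs) / len(outputs)
    return results


# Hypothetical prompt variants: the one in production and a small tweak to it.
TEMPLATES = {
    "current": "Answer the user. Question: {question}",
    "candidate": "Be brief. Question: {question}",
}
QUERIES = ["How do I reset my password?", "Where is my invoice?"]

# Illustrative quality check: the product wants answers of at most 80 characters.
results = backtest(TEMPLATES, QUERIES, fake_model, lambda out: len(out) <= 80)
print(results)
```

In practice the queries would come from logged production traffic and the check would be a real quality metric, but the shape of the comparison stays the same.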

This has become the most obvious pain point for LLM developers. As a result, swathes of startups have already flooded the space of LLM developer tooling.

But to build long-term customer retention, it makes a whole lot of sense for OpenAI and other LLM providers to build their own in-house solution.

A good LLM tooling solution needs a few things:

(1) A code integration to allow logging

(2) A method of running recurring ‘eval’ scripts on the resulting logs

(3) An experimentation playground to back test new prompts

(4) A dashboard to show results
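The first, second, and fourth components can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a description of any existing product: `LoggedModel`, `LogRecord`, `run_evals`, and the two evals are hypothetical names, `fake_model` stands in for a real LLM call so the example runs offline, and the printed report is a stand-in for a dashboard.

```python
import json
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class LogRecord:
    """One logged model call (hypothetical schema)."""
    timestamp: float
    prompt: str
    output: str


class LoggedModel:
    """Wraps any prompt -> text callable and records every call it makes."""

    def __init__(self, model_fn: Callable[[str], str]) -> None:
        self.model_fn = model_fn
        self.logs: List[LogRecord] = []

    def __call__(self, prompt: str) -> str:
        output = self.model_fn(prompt)
        self.logs.append(LogRecord(time.time(), prompt, output))
        return output


def run_evals(
    logs: List[LogRecord],
    evals: Dict[str, Callable[[LogRecord], bool]],
) -> Dict[str, float]:
    """Return the pass rate of each eval over the logged calls."""
    if not logs:
        return {name: 0.0 for name in evals}
    return {
        name: sum(check(record) for record in logs) / len(logs)
        for name, check in evals.items()
    }


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; fails on refund questions."""
    return "" if "refund" in prompt else f"Answer to: {prompt}"


# Illustrative evals; real ones might check for hallucinations or tone.
EVALS = {
    "non_empty": lambda r: bool(r.output.strip()),
    "under_200_chars": lambda r: len(r.output) <= 200,
}

model = LoggedModel(fake_model)
for user_query in ["How do I reset my password?", "Where is my refund?"]:
    model(user_query)

# A recurring job would run this on a schedule and feed a dashboard.
report = run_evals(model.logs, EVALS)
print(json.dumps(report, indent=2))
```

The point of the wrapper is that logging happens at the call site, so the evals need no extra instrumentation from the developer.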

OpenAI can build a solution that’s inherently better than its competitors’. On each of the above dimensions, OpenAI is well suited to offer a complete developer experience.

(1) Developers are already calling OpenAI’s API, so logs are already being sent to OpenAI and can be used for analytics without any additional work from the developer. This means OpenAI could easily release an out-of-the-box monitoring and analytics solution built into developers’ current API calls. That’s very attractive, especially to startups that would love to avoid additional complexity.

(2) OpenAI has struggled in the past to scale its infrastructure to keep up with demand. But developers already supply their own API keys to run eval scripts with third-party vendors, which means these workflows are already running on OpenAI infrastructure. An out-of-the-box solution may entice many developers who weren’t previously running any analytics to start doing so, but infrastructure problems are unlikely to be a long-term concern.

(3) OpenAI already has a prompt playground which many people still use for testing new prompts. In fact, Tyler Cowen likes using this playground instead of the actual ChatGPT interface!

(4) Similarly, OpenAI already has a dashboard for showing usage metrics. While it’s currently geared towards showing pricing information, it could easily be extended to show monitoring results. Having a single source-of-truth dashboard that combines pricing and monitoring would be a terrific experience for developers!

Given this, it’s quite feasible for OpenAI to build a great monitoring and analytics solution for LLM developers.

But why focus on this over countless other priorities?

Building an out-of-the-box monitoring solution for LLM developers will create an entire developer experience around OpenAI’s API. Development teams will rely on OpenAI not just for LLM access but also for logging, evaluation, experimentation, QA and more.

Switching to a different monitoring and analytics tool requires moving over historical data, reaching parity on configurations, and retraining teams on workflows they’ve already become accustomed to. That’s an expensive process for teams.

This will make it much less attractive for teams to switch between models for short-term quality gains. Instead, many would opt to wait for their current provider to release its own update, which will likely surpass the quality of any intermediate competitor model. As a result, a competing model will need significantly higher performance to convince another provider’s customers to switch.

By prioritizing LLM developer tooling, OpenAI can make the leap from commodity to ecosystem.
